Regularization in Machine Learning: Meaning

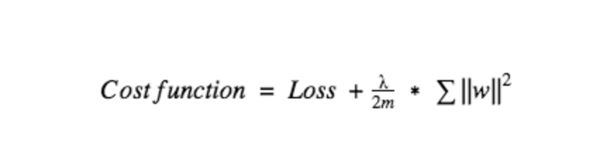

Objective function with regularization. The concept of regularization is widely used even outside the machine learning domain.


In general, regularization involves adding extra information to the learning problem to enforce generalization.

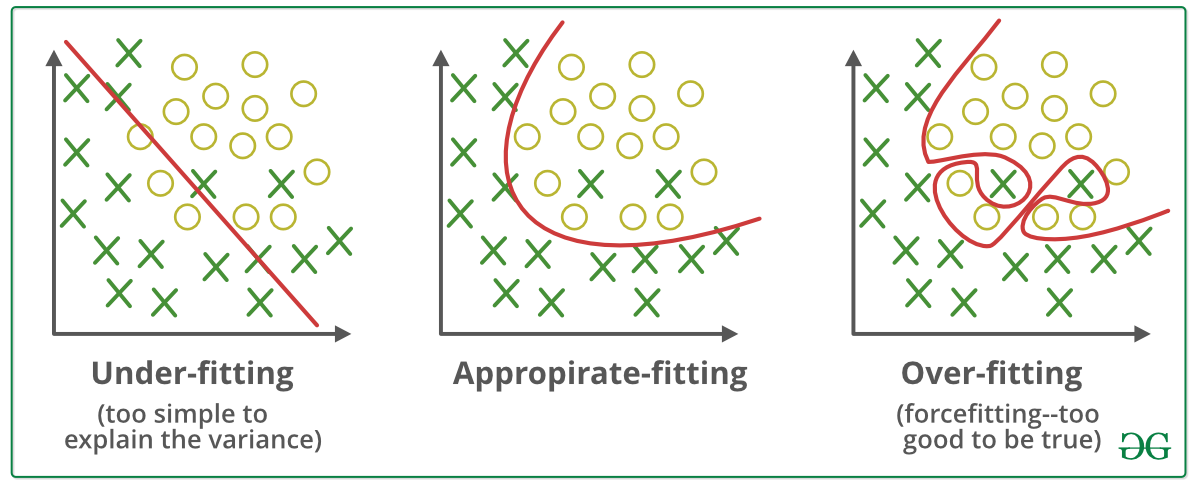

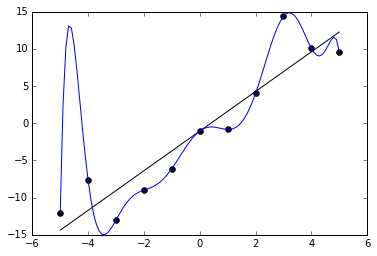

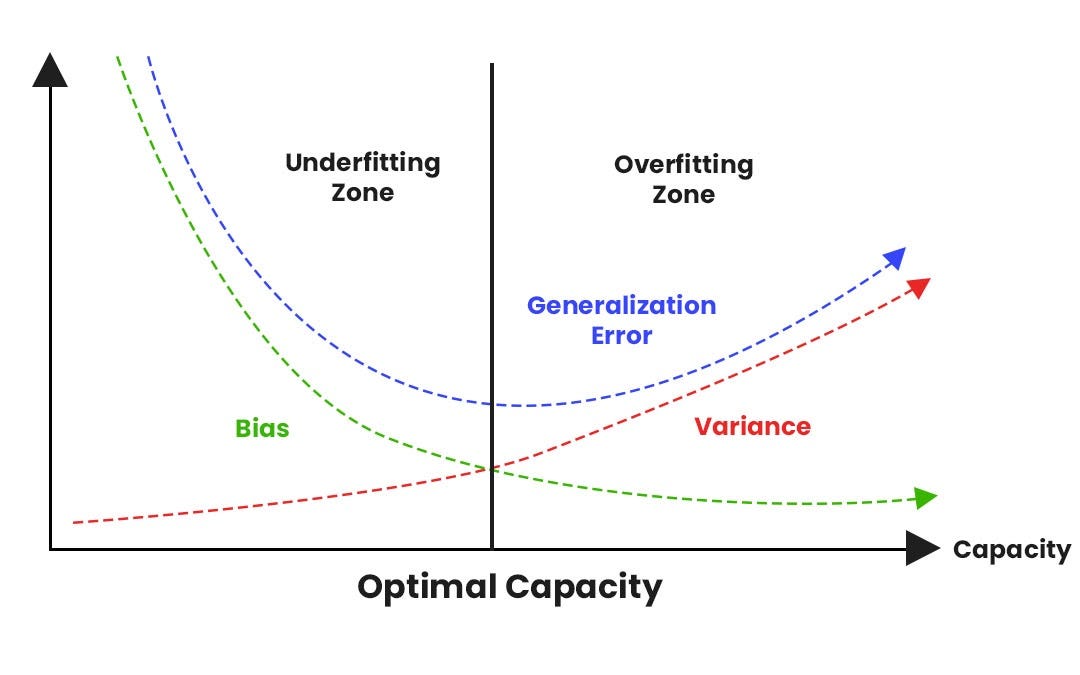

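Overfitting is a phenomenon which occurs when a model fits the noise in its training data as well as the underlying pattern. Formally, regularization adds a penalty term to the loss that is being minimized. The regularization parameter in machine learning is λ, which sets the weight of that penalty.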
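One common way to write the regularized objective is sketched below. The original equation did not survive on this page, so the symbols W (weights), X (inputs), y (targets) and f (the model) are assumptions; L and the Frobenius norm follow the wording used elsewhere in this post.

```latex
\min_{W} \; L\big(f(X; W),\, y\big) \;+\; \lambda \,\lVert W \rVert_F^{2},
\qquad
\lVert W \rVert_F^{2} = \sum_{i}\sum_{j} W_{ij}^{2},
\qquad \lambda \ge 0
```

With λ = 0 the penalty vanishes and only the loss is minimized; larger values of λ push the weights towards zero.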

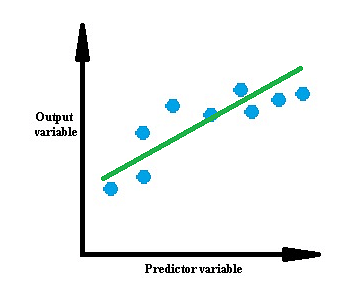

One of the most important issues in machine learning is overfitting. Regularization is one of the techniques used to control overfitting in highly flexible models.

Regularization is used with many different machine learning algorithms. In the regularized objective, L is any loss function and the subscript F denotes the Frobenius norm. MLOps (Machine Learning Operations) comprises a set of practices that combine machine learning, DevOps, and data engineering.

Welcome to this new post of Machine Learning Explained. After dealing with overfitting, today we will study a way to correct overfitting with regularization. Overfitting happens when an ML model learns the specific patterns and the noise in the training data. L1 regularization is another common form of regularization.

In Lasso regression, the model is penalized by the sum of the absolute values of its coefficients. Regularization is a technique used to solve the overfitting problem of machine learning models.
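The L1 penalty used in Lasso regression can be illustrated with a minimal sketch. It assumes a squared-error loss and uses proximal gradient descent (ISTA) rather than any particular library solver. The function names `soft_threshold` and `lasso_ista`, the synthetic data, and the value `lam=0.5` are all hypothetical choices made for illustration.

```python
import numpy as np

def soft_threshold(z, t):
    """Shrink each entry of z towards zero by t; entries within [-t, t] become 0."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def lasso_ista(X, y, lam, n_iter=500):
    """Minimize (1 / (2n)) * ||y - Xw||^2 + lam * ||w||_1 by proximal gradient steps."""
    n_samples, n_features = X.shape
    # Step size 1 / (largest eigenvalue of X^T X / n) keeps the iteration stable.
    step = 1.0 / np.linalg.eigvalsh(X.T @ X / n_samples).max()
    w = np.zeros(n_features)
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y) / n_samples                # gradient of the squared-error term
        w = soft_threshold(w - step * grad, step * lam)     # proximal step for the L1 penalty
    return w

# Synthetic data (an assumption for this demo): only features 0 and 3 matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
true_w = np.array([3.0, 0.0, 0.0, -2.0, 0.0])
y = X @ true_w + 0.1 * rng.normal(size=200)

w_lasso = lasso_ista(X, y, lam=0.5)
print(np.round(w_lasso, 3))
```

On this data the coefficients of the three irrelevant features should come out as exactly zero, while the two informative coefficients are shrunk towards zero but stay clearly non-zero. This sparsity is what distinguishes the L1 penalty from the L2 penalty.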

We use regularization to prevent overfitting. L2 regularization corresponds to Ridge regression, a model tuning method used for analyzing data with multicollinearity. The regularization parameter in machine learning is λ, and it controls how strongly the penalty is applied.
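A minimal sketch of Ridge regression on data with multicollinearity follows. It assumes the closed-form solution without an intercept term. The helper name `ridge_fit`, the synthetic data, and the λ values tried are hypothetical choices made for illustration.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution: solve (X^T X + lam * I) w = X^T y."""
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

# Synthetic data (an assumption for this demo): x2 is nearly a copy of x1.
rng = np.random.default_rng(0)
x1 = rng.normal(size=100)
x2 = x1 + 0.01 * rng.normal(size=100)
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.1 * rng.normal(size=100)

lambdas = [0.0, 1.0, 100.0]
weights = [ridge_fit(X, y, lam) for lam in lambdas]
norms = [float(np.linalg.norm(w)) for w in weights]

for lam, w, norm in zip(lambdas, weights, norms):
    print(f"lambda={lam:6.1f}  coefficients={np.round(w, 3)}  norm={norm:.3f}")
```

As λ grows, the norm of the coefficient vector shrinks. With λ = 0 the two nearly identical features can receive unstable, offsetting coefficients, which is the multicollinearity problem the penalty is meant to tame.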

This is a form of regression that constrains, regularizes, or shrinks the coefficient estimates towards zero. Machine learning professionals are familiar with the problem of overfitting. Regularization is one of the most important concepts of machine learning.

The parameter λ imposes a higher penalty on variables with larger values and hence controls the strength of the penalty term. In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that changes the resulting answer to be simpler. In other terms, regularization means discouraging the learning of a more complex or more flexible model.

In machine learning, regularization is a procedure that shrinks the coefficients towards zero. It is a technique to prevent the model from overfitting by adding extra information to it. L2 regularization, also known as Ridge regression, counters overfitting by adding a penalty equal to the sum of the squares of the coefficients.
